ELT vs Reverse ETL: An Architectural Overview

An engineer’s guide explaining the two paradigms — by community member, Arun Thulasidharan

“Does Reverse ETL need to exist as a new category or can it just be an extension of ELT?”

“Can current ELT solutions like Fivetran or Airbyte support a data pipeline with Snowflake as the source and Salesforce as the destination?”

Variations of these questions have shown up across data communities repeatedly, and even though most people echo the need for a single data integration solution for both ELT and Reverse ETL workflows, not much has been written about the design patterns and limitations of building a hybrid solution.

So when community member, Arun Thulasidharan from Castled asked me to provide feedback on this post, I had so many of my own questions answered that I convinced Arun to publish this guide on Data Beats.

Read on to gain a thorough understanding of the design patterns and the architectural differences between ELT and Reverse ETL.

And while this guide might appeal more to data people, it can help non-data folks evaluating data integration tools make more informed decisions.

Let’s dive in!

Key capabilities of a data pipeline solution

Data Ingestion: It should have an Ingestion or Extraction layer to capture the updates incrementally from a source

Data Sink: It should have a Sink or Load layer to batch update records from a source to a destination

Ordering and Deduplication: Since the same record can be updated multiple times at the source, a data pipeline solution should be able to sync the updates to the destination reliably and in order

Error Handling: The data sync on the destination can fail for reasons such as connectivity issues, schema incompatibility, etc. The data pipeline solution should be able to automatically retry the failed records and provide a failure report to the user

Let’s see how the implementation varies when the data warehouse sits on two sides of the data pipeline.

Data Ingestion

Both ELT and Reverse ETL solutions must capture incremental updates from the source and sync only the delta to the destination.

It is relatively straightforward to read incremental updates from a database or a SaaS application — the primary source in an ELT pipeline. For instance, all databases provide a write-ahead log that is used for recovery and replication purposes, and the same can be used to read incremental changes.

Data warehouses, on the other hand, are typically unable to capture changes.

While Snowflake has implemented Table Streams that can be used to capture table updates, it does not fit into a typical Reverse ETL use case as the standard input to a Reverse ETL pipeline is a query or dbt model, not a table.

The only way to handle change data capture in a data warehouse is to keep snapshot tables in the warehouse and on every run, compare query results with the respective snapshot table.

Data Sink

The data sink in an ELT pipeline typically requires transforming the source records in a format compatible with the target warehouse and storing those records intermittently in a cloud file storage like S3 or Google Cloud Storage.

A copy or load job is then triggered on the data warehouse referring to the file location, which can potentially update more than a million records in one go.

However, the typical destinations in a Reverse ETL pipeline are sales and marketing apps like Salesforce, Hubspot, or Intercom. The sink to these destinations mainly involves batching the records in-memory and making an API call to update a bounded number of records simultaneously — say 1000 via one API call.

Additionally, Reverse ETL destinations have other constraints like rigid rate limits and timeouts, making the data sync even more challenging.

Ordering and Deduplication

Data pipeline frameworks are complicated with multiple moving components, and maintaining the order of records across different components is not trivial.

ELT frameworks can get over this problem by using the analytical power of data warehouses to compare and ignore older records at scale. For instance, each record in an ELT pipeline has an ingestion timestamp that can be used to ignore stale updates at the time of the final data sync.

However, Reverse ETL destinations don’t have this privilege; hence, the Reverse ETL framework must be robust enough to handle these edge cases.

Error Handling

Data pipelines typically use an error store (or dead letter queue) that stores the failed records and processes them automatically once the error is resolved.

However, this can result in data inconsistency if stale updates are processed — such as an old record overwriting the latest version of that record in the warehouse, leading to deduplication/ordering issues that are not trivial to solve in a Reverse ETL solution.

Reverse ETL pipelines can work around this issue by sending the failed records back to the ingestion layer, where they will be accounted for in the subsequent pipeline runs. Therefore, Reverse ETL pipelines require both ingestion and sink layers to work together to handle the retry and ordering of failed records.

Proposed frameworks for ELT and Reverse ETL

Now let’s try to design frameworks for ELT and Reverse ETL, factoring in all the use-cases and limitations mentioned above.

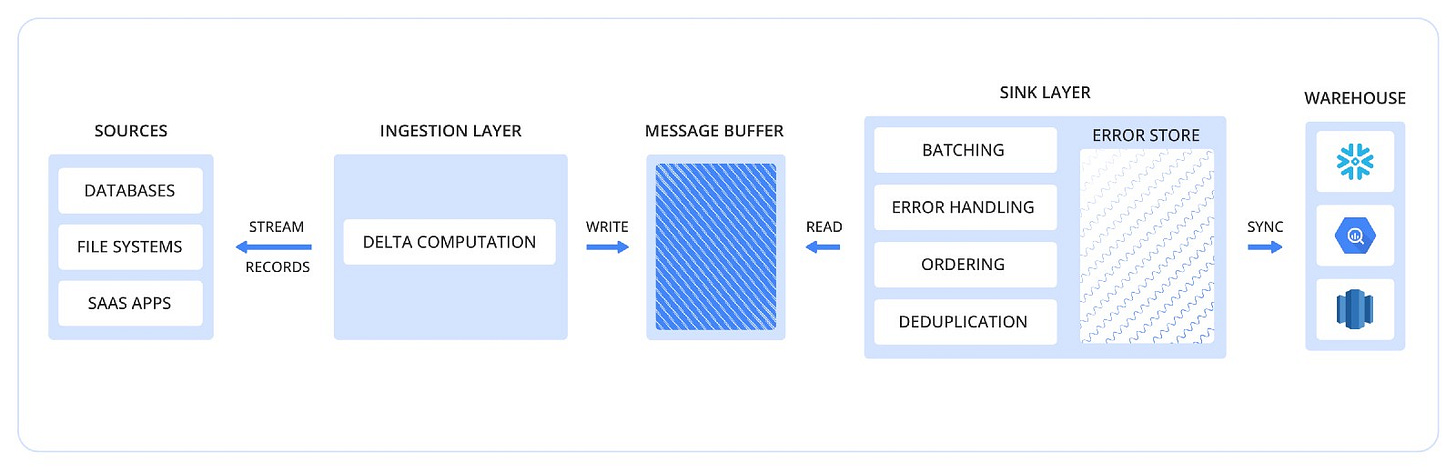

ELT framework

The ingestion and the sink layers in an ELT framework should be decoupled and work independently.

The Ingestion layer captures incremental updates from the source system and writes them to an intermittent message buffer (an ordered queue like Kafka or even a cloud file storage like S3/GCS).

The sink layer reads the records in order from the message buffer, batches them together, and runs a load job to sync the data to the warehouse.

The sink layer tracks sync failures and stores the failed records in an error store. It also takes care of ordering and deduplicating records in the data warehouse once the failed records are re-triggered.

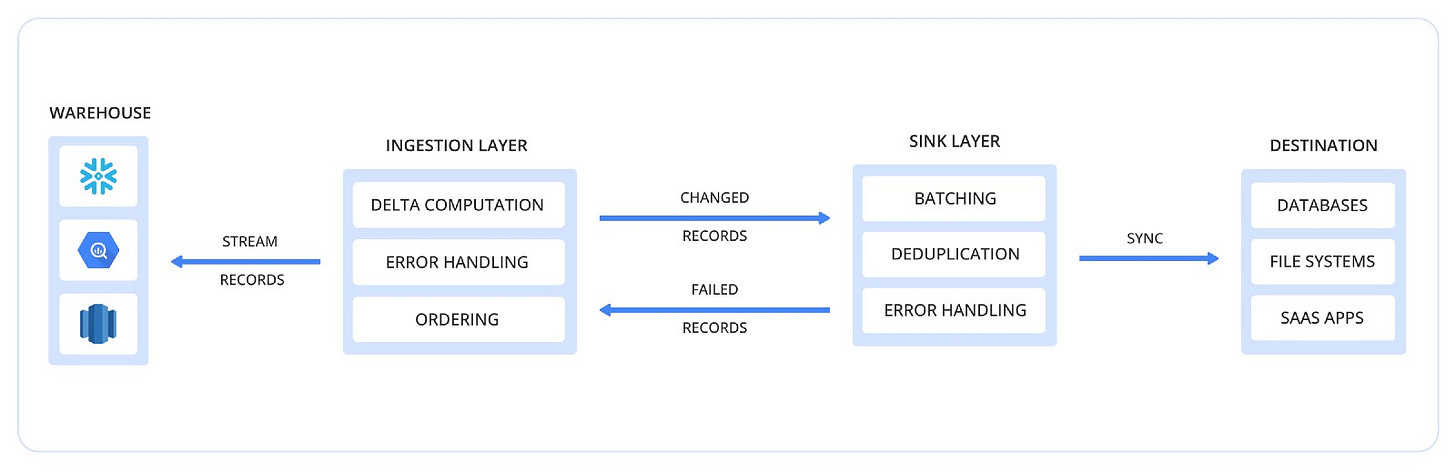

Reverse ETL framework

The Reverse ETL workload gets executed as a “single batch job,” where the ingestion and sink layers interact directly. The ingestion layer computes the changed records using snapshot tables on the data warehouse and passes the updates to the data sink.

The sink layer batches the records in-memory and calls the destination API to make the relevant inserts/updates based on the record’s unique identifier.

The sink layer classifies failures and passes failed records along with error reports back to the ingestion layer.

The ingestion layer then accounts for the failed records in the delta computation and takes care of re-ingesting those records in the subsequent pipeline runs.

The sink layer also makes error reports available to the user.

Closing thoughts

It’s important to note that the proposed architectures here are only meant to highlight the primary differences one needs to keep in mind when designing ELT and Reverse ETL frameworks.

I believe that both ELT and Reverse ETL are data pipeline solutions to sync data from a source to a destination reliably. But the product assumptions, limitations, and other acceptable tradeoffs change drastically with the position of the data warehouse in the pipeline.

Therefore, designing a generic framework to handle ELT and Reverse ETL would be an anti-pattern resulting in a sub-optimal experience for both use cases.

This, however, doesn’t imply that the same vendor cannot offer both products — in fact, I do think that ELT vendors will either build or buy Reverse ETL capabilities sooner rather than later.